Mindful Tech: Teaching Kids Self-Regulation in the Screen Age

Kids don’t learn to ride a bike by being kept off it.

They learn with training wheels.

We applied the same principle to digital habits.

-

Overview

Challenge

Kids get phones before they can self-regulate, while platforms are engineered to keep them hooked. Most tools block use instead of teaching habits, leaving children unprepared when limits come off.

Outcome

Across eight one-week sprints with a rotating global volunteer team, we reframed the problem from control to coaching, tested early concepts, analyzed the market, and built a research-backed foundation for teaching real self-regulation skills.

-

Scope & Role

Duration

Eight sprints (Mar–Jun 2024)

Team

~150 participants → ~30 core collaborators

Role

Research & Strategy Lead, UX Designer

Led research, synthesis, and alignment across a dynamic, global team, ensuring decisions stayed grounded in evidence and developmental psychology.

-

Key Contributions

Built shared research systems to align 150+ contributors

Reframed the problem using child-development & addiction science

Led consistent parent usability testing & shared synthesis

Called a strategic pause to realign around validated problems

Conducted competitive audit & introduced “training-wheels” autonomy model

Co-designed prototype flows, protected intent in testing

Practiced servant leadership to support rotating contributors and maintain momentum

-

Project Outcomes

Reframed the challenge from screen-time restriction to building self-regulation skills

Defined clear parent + child problem statements grounded in developmental needs

Identified market gap: most tools block or monitor; few teach habits and autonomy

Learned playful mechanics engage, but reflection cues must be more explicit

Built scalable research workflows that supported a rotating international team

Set next-test criteria for validating staged habit-building and autonomy scaffolding

Phase 1 | Sprints 1–4

Discovery & Early Prototyping

The Problem

Since she got her phone, Emma, 11, is always scrolling. Every night, the same battle: her parents ask her to put it down…

Sometimes it's "Just one more video?"

Sometimes it's "But all my friends are online!"

Parental controls either block everything or nothing. When limits are enforced, Emma feels punished and her parents feel guilty.

The problem felt urgent and solvable.

We framed our exploration with this question:

How might we mitigate the potentially addicting effects of social media in children given their brains are not fully developed yet?

Sprints 1 & 2 | Discovery Research Foundation

Project Context

~150–200 global contributors, fully remote, working live and asynchronously.

One-week sprints demanded rapid insight generation, alignment, and traceability. I helped adapt Agile UX practices so the team stayed coordinated and insights stayed visible across time zones.

Goal

Understand children’s social media use and parental challenges to identify evidence-based intervention opportunities.

Process | Turning Chaos into Alignment

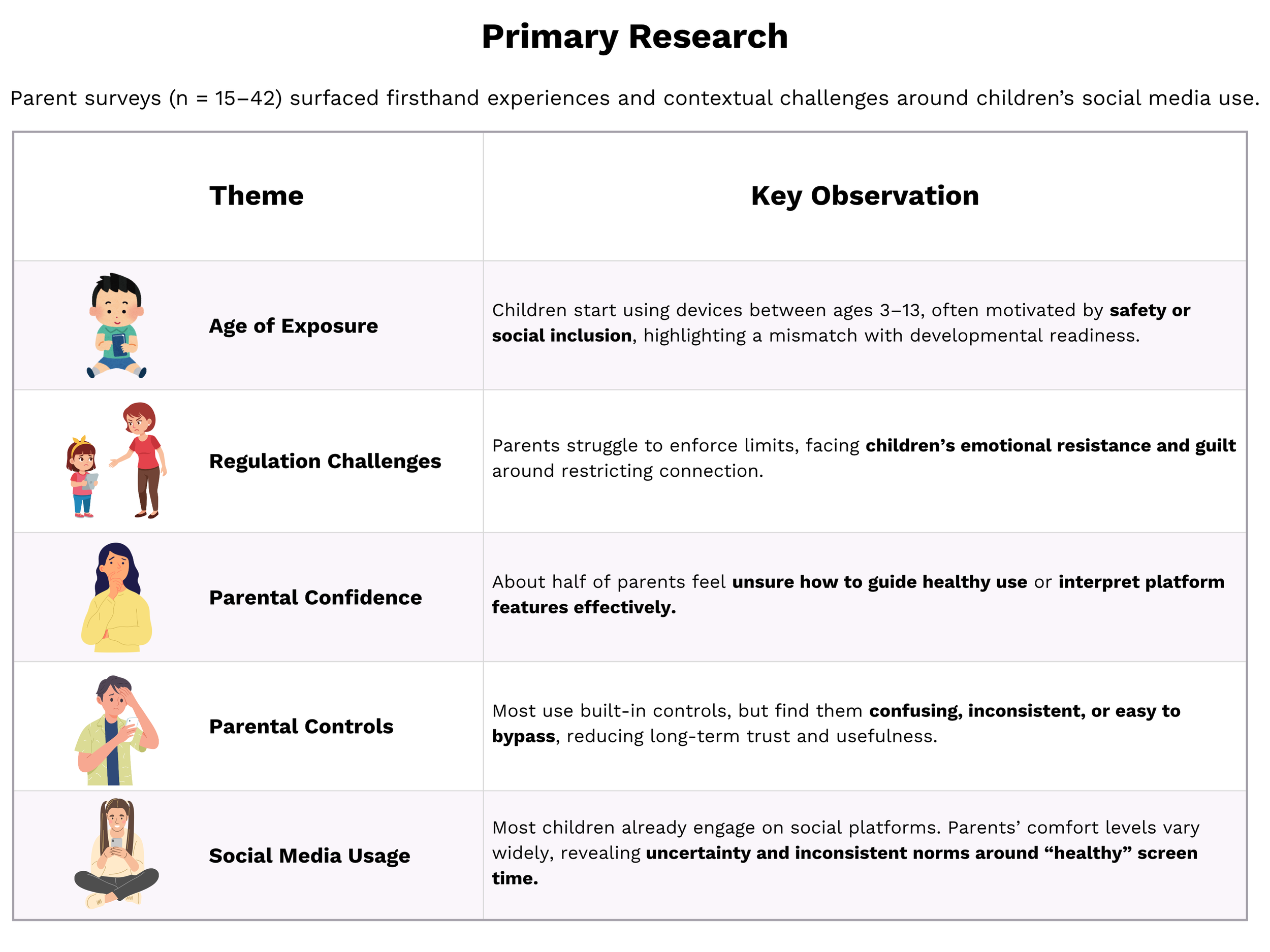

Conducted primary (parent survey) and secondary research (cognitive development, addiction, behavioral interventions).

Organized shared FigJam research tables to centralize findings and maintain traceability from assumptions → insights → HMWs. (artifact 1)

Translated insights into actionable How Might We (HMW) statements, creating a prioritized backlog of opportunities. (artifact 2)

Used affinity mapping and dot-voting to cluster and prioritize HMWs, aligning the team on key opportunity areas for hypothesis testing: parent-child connection, self-awareness and education, self-control, and mental health support. Dot voting prioritized 6 HMWs. (artifact 2)

1. Research Compilation FigJam Tables

2. HMW Backlog, Affinity Mapping, Dot Voting Results

My Role | Discovery Lead

Transformed scattered insights into focused intervention opportunities by leading research strategy, cross-team facilitation, and synthesis across 150+ remote participants.

Research Strategy & Hypothesis Development

Hypothesized that parental controls fail because apps engineer addiction, requiring an addiction-recovery approach: children must become aware of their habits before change can begin

Validated through secondary research into cognitive development, addiction science, and behavioral intervention models

Reframed problem from external control to self-awareness, redirecting solution exploration

Research Systems & Cross-Team Alignment

Maintained shared research artifacts and continuously refined HMWs for clarity and continuity

Sustained asynchronous collaboration through FigJam, Discord, and structured follow-ups

Clarified and connected findings across secondary and primary research teams as insights evolved sprint by sprint

Servant Leadership

Naturally assumed facilitative leadership through organization, curiosity, and initiative

Cultivated enthusiasm and ownership, making behavioral science exploration collaborative and energizing

Guided team toward shared clarity and evidence-based direction without imposing conclusions

What We Learned | Research Highlights

Our research combined scientific evidence with real-world context:

Secondary research mapped the cognitive, behavioral, and design factors that make children vulnerable and suggested evidence-based interventions.

Primary research made these insights tangible, showing how parents struggle in managing social media use.

What We Prioritized | HMW Statements

Here are the How Might We (HMW) statements from the research backlog the team identified as the most critical challenges.

MUST DO

HMW create limits on features like infinite auto-scrolling, auto-play videos and push notifications to mitigate addiction to social media?

HMW help people overcome social media addiction?

HMW facilitate self-awareness and reflection among users regarding their social media usage, empowering them to identify addictive behaviors and take proactive steps towards healthier digital habits?

SHOULD DO

HMW develop personalized strategies to cultivate self-control in children regarding social media usage, considering their unique interests and susceptibility to addiction?

HMW develop new healthy habits to replace habitual social media use and reshape response to pleasure stimuli?

HMW enhance parental control features to make them more robust and resistant to bypass attempts by children?

Sprints 1 & 2 | Key Outcomes

Prioritized Opportunities

6 How Might We (HMW) statements selected to guide hypothesis testing in the next sprints.

Validated Discovery

Three core drivers of screen overuse identified: addictive platform features, limited user self-awareness and self-regulation, and inadequate parental management tools, clarifying where interventions would have the most impact.

Research Foundation Established

Shared research artifacts and the HMW backlog provided a structured starting point for ideation, prototyping, and testing.

Sprints 3 & 4 | Ideation, Prototyping, Testing, and Validation

Context

One-week sprints required hard prioritization. From the six HMWs at the end of Sprint 2, the team used a MoSCoW matrix and dot voting (artifact 1 below) to select one focus:

How might we facilitate self-awareness and reflection among users regarding their social-media usage, empowering them to identify addictive behaviors and take proactive steps toward healthier digital habits?

Goals

Build a functional prototype translating prioritized research insights into a tangible solution

Test and analyze usability and engagement to validate assumptions and gather actionable feedback for rapid iteration

Process | Rapid Prototyping and Validation

Conducted Crazy 8 ideation to generate concepts and select the Tamagotchi-inspired idea: Take care of your pet—use your social media wisely. Keep your pet alive. (artifact 2)

Split the team into two parallel workstreams:

Prototype Team: Defined core features and built Pixel Paws, an interactive Figma prototype visualizing screen time awareness. (artifact 3)

Testing Team: Designed a usability-testing plan, recruited 7 parent participants, and coordinated remote moderated sessions. Because of IRB constraints, we tested the concept with parents as proxies for the 8–13 age group, keeping the evaluation ethical yet insightful. (artifact 4)

Moderated seven think-aloud sessions to capture user reactions and emotional engagement with pet mechanics and rewards.

Synthesized findings through affinity mapping and atomic insights charting across five dimensions (Pet Animation, Rewards, Meters, App Purpose, UI), transforming observations into refined HMWs for next Sprint priorities. (artifact 4)

1. MoSCoW Matrix & Dot Voting Results

2. Crazy 8 Ideation & Dot Voting

3. Prototyping Plan and Prototype Screenshots

4. Full Usability Testing Package & Atomic UX Research

My Role | Usability Testing Lead

Led the testing workstream from planning through synthesis, coordinating 7 remote sessions with parents of children aged 8–13.

Strategic setup

Translated awareness-focused HMW into testable hypotheses and evaluation metrics

Developed interview script and testing plan aligned with prototype features

Recruited 7 parents of children aged 8–13 for representative insights

Coordination & Execution

Coordinated 7 remote sessions across time zones (moderated 1, supported 6)

Coached team members on protocols, think-aloud capture, and observation techniques

Maintained shared documentation and sustained momentum through sync and async channels

Analysis & Synthesis

Facilitated affinity mapping workshops to surface patterns and implications

Guided atomic insights process, translating raw feedback into actionable findings that shaped next sprint priorities

Ensured insights were interpreted collectively, keeping the team aligned and engaged

Testing Our Assumptions

With Pixel Paws complete, we evaluated whether its awareness-based mechanics achieved their goal: using a playful, emotionally engaging digital pet to make screen time visible and encourage reflection rather than restriction.

The table below maps each interaction to its research question and what we learned across seven usability sessions with parent proxies.

Tested

Parents expressed interest in the concept for supporting screen-time management but questioned its ability to sustain long-term behavior change without a stronger educational framing.

Broader Insights

Interface elements were only partially understood (42% UI comprehension), suggesting the need for clearer hierarchy and feedback cues.

Sprints 3 & 4 | Key Outcomes

Gamified Breaks Concept Validated

Playful pet mechanics and rewards successfully captured user attention and motivated interaction, confirming usability and engagement.

Users recognized the break prompts, validating comprehension of the core concept.

Reflection Goal Missed

The goal of building users’ awareness of and reflection on social media overuse was missed: visual and interactive cues intended to signal overuse were misinterpreted or went unnoticed, revealing a gap between design intent and user comprehension.

Scope and Priorities Clarified

Testing revealed gaps in awareness-building through gamification alone, refining the problem focus for the next phase, while other priority HMWs identified in discovery remain open for exploration.

Phase 2 | Sprints 5–8

Discovery & Early Prototyping

Phase 1 Recap | Questioning Our Solution Space

After testing Pixel Paws, the team was eager to iterate.

Yet I felt we had “failed fast and learned fast” already and refining the prototype would only partially address the problem. After all, awareness-building was just the starting point of a broader solution.

In Phase 1, we'd built a rich research backlog spanning cognitive development, addictive design, existing addiction solutions, and parental struggles.

With 150+ contributors working at speed, I had the uneasy sense we'd moved so fast we might have left key insights behind. Instead of iterating on Pixel Paws, I wanted to revisit that backlog and explore the wider solution space.

And last but not least…because my own son's phone seems glued to his hand, helping kids and families find balance again isn't just design work for me, it's personal.

Sprint 5 | Rebuilding from Research Up

Context

Phase 2 began with about 30 active contributors. As one of three Sprint Masters coordinating the team across time zones, I helped facilitate collaboration through live workshops and asynchronous sessions in Discord and FigJam.

I proposed pausing design to revisit the research backlog and make sense of our findings before moving forward. After discussion and a team vote, we agreed to realign around the core problem before continuing design.

Goal

Consolidate insights from previous sprints to confirm what we knew, uncover what we missed, and set a stronger direction for the next design phase.

Process | Reassessing the Challenge

Re-evaluated the existing How Might We backlog from primary, secondary, and Phase 1 prototype research.

Refreshed the MoSCoW matrix with updated context and insights to reprioritize problem areas.

Ran team dot voting to align on the most relevant and impactful HMW questions.

Translated top-ranked HMWs into clear problem statements to define sharper design targets.

Segmented the new problem statements for Parents and Children to reflect their different needs and experiences.

Held a final dot vote to select one primary problem statement per group, setting Phase 2’s direction for concept testing and design refinement.

Revisiting the Research | Problem Statements Defined

Most feasible problem statements:

For Parents

Parents struggle to guide their children in developing self-management strategies for social media because children don’t fully understand the negative impacts of excessive use on their mental well-being.

For Children

Preteens aged 10–12 struggle with mobile-device use due to underdeveloped self-management skills, leading to excessive social-media use that harms their development, physical activity, mental health, and sleep.

Sprint 5 | Key Outcomes

Problem Space Clarified

Distinct problem statements for parents and children defined, establishing shared understanding and ensuring all future work stays focused on users’ core needs, preventing misdirection and aligning design decisions.

Core Problems Identified

Preteens’ heightened vulnerability to addictive design and parents’ lack of effective ways to guide them recognized, keeping focus on the real underlying challenges rather than superficial fixes.

Evolved Problem Scope

Project scope broadened beyond the Phase 1 problem space of parental-control tools and awareness concepts, opening the way for more comprehensive exploration of how families experience and manage digital use together.

Sprint 6 | Competitive Analysis

Context

With problem statements defined in Sprint 5, our goal was to develop MVP and MMP vision boards with parallel teams, one working on parents, one on children. As work progressed, completing the boards proved too complex for most contributors, slowing momentum.

I proposed redirecting my team to a competitive audit instead, grounding our exploration in what exists, where gaps are, and where we could create value.

Goal

Map the competitive landscape to identify what works, what's missing, and where opportunities exist to serve families and preteens better.

Process | Competitive Market Analysis

Pivoted my team from vision boards to a competitive audit of 20+ existing tools.

Selected competitors to ensure broad coverage:

Product types: productivity tools, habit-building and gamified apps, parental control suites, and habit-tracking systems

Target audiences: children (< 13), preteens (12+), teens (13+), parents, young adults (18+), and general all-ages tools

Built a shared evaluation grid to ensure consistent, comparable analysis across all competitors, assessing:

Company / Product Type / Target User

Six core themes: time management, rewards and reinforcement, awareness and education, control mechanisms, engagement and motivation, behavior change and outcomes

Differentiators and opportunities

Conducted SWOT analyses on key competitors to assess positioning and strategic approaches.

Compiled a feature summary mapping how each product addressed (or ignored) the six themes.

Competitive audit table, SWOT analysis table, and features summary table

My Role | Facilitating Analysis and Defining Design Direction

Although I was no longer the formal Sprint Master, I continued to facilitate the audit to keep the team moving. I coordinated the evaluation grid, guided contributors through data collection and synthesis, and supported the new leads as they built confidence.

While reviewing the results below, I saw a consistent gap: most tools focused on controlling behavior rather than helping children learn self-regulation.

To express a testable direction, I introduced a metaphor, the “training wheels” concept.

Building on that idea, I framed my lean hypothesis:

Children will be able to self-manage their social media usage if they learn the skills in stages, with incentives and gradual reduction of control.

I translated this into a dual-interface concept:

Parents begin with higher involvement through control and coaching tools.

Children progress through stages reinforced by rewards tied to skill growth.

As confidence builds, parental oversight recedes and autonomy increases.

This articulation connected insights from the audit, highlighting the gap between control and autonomy, and offered a clear, testable philosophy of progressive autonomy that I shared with the team and that would later be explored in Sprint 7.

Like learning to ride a bike, from tricycle ➔ training wheels ➔ independent balance, children could learn digital self-regulation through scaffolded guidance that fades as skills develop.

Findings | Market Landscape & Opportunity Spaces

Strategic Landscape (SWOT)

Strengths: The market shows strong control mechanisms, high engagement through gamification, and reliable productivity flows.

Weaknesses: Complex UIs, bypassable controls, and minimal educational guidance limit accessibility and long-term learning.

Opportunities: Adaptive, age-sensitive systems and rewards that connect digital progress to real-world behavior.

Threats: A highly saturated space with little differentiation among parental-control and productivity products.

Design and UX Pattern Opportunities

Time management: Focused on blocking and tracking, not teaching skills; younger users underserved.

Rewards: Cosmetic and disconnected from meaningful outcomes; often too mature for children.

Parental tools: One-directional control with little collaboration or shared goals.

Education: Surface-level content, lacking child-facing guidance for gradual autonomy.

Behavior change: Limited evidence beyond testimonials; few adaptive features as children grow.

Market Gap

Products cluster at two extremes: strict control for parents or gamified self-help for motivated older users.

Few support developmental self-regulation that evolves with the user over time.

Sprint 6 | Key Outcomes

Market Understanding Established

Comprehensive SWOT and feature analyses mapped 20+ digital wellness and parental control tools by product type and audience, clarifying what the market offers, where it excels, and where it falls short.

This grounded future strategy in tangible, objective evidence rather than assumption.

Gaps and Benchmarks Defined

Best-in-class features benchmarked: gamified motivation, strong parental controls, and intuitive, age-appropriate interfaces. Key gaps exposed: few tools teach time management, link rewards to effort, foster family collaboration, or evolve as children mature. These insights shaped clear opportunity areas and design priorities for future innovation.

Conceptual Framework Introduced

Research and audit insights synthesized into a testable philosophy of progressive autonomy (the training wheels metaphor), giving the team a shared direction and foundation for Sprint 7 ideation.

Sprints 7 & 8 | MVP Validation and Analysis

Context

Sprint 6’s competitive audit revealed a core opportunity: teaching self-regulation rather than enforcing control.

Fresh from that research and eager to validate the insight, I proposed we pivot from static MMP vision boards to testing a prototype, as the team had been struggling with the complexity of defining parallel vision boards for parents and children.

After a group discussion, we agreed to take an Agile approach: test early, fail fast, and learn quickly. We set aside the MMP boards and focused on an MVP for parents, since IRB restrictions prevented testing with children.

Goal

Move from abstract vision work to tangible validation by testing an early MVP concept with parents. The aim was to observe how they respond to prototype features and gather initial insights to guide future iterations.

Vision Statement

Empower parents to effectively foster healthy screen-time habits and a balanced lifestyle for their children.

Process | From Vision Chaos to MVP Clarity

Pivoted from dual MMPs for parents and children to a single MVP for parents, enabling faster iteration and clearer validation of one audience’s needs. Defined and prioritized a parent-focused problem statement and How Might We for testing. (artifact 1)

Ideated and prioritized solutions through Crazy 8s, MoSCoW, and affinity mapping to align on priorities and prototype scope. (artifact 2)

Built user flows and a low-fidelity Figma prototype while a parallel workstream drafted the research plan, goals, and interview script for early validation. (artifact 2)

Recruited parents of children who use social media and conducted remote moderated concept tests to gather early reactions and usability feedback.

Synthesized results through two separate manual affinity maps, a Rainbow Chart, an Atomic UX Canvas, and AI-assisted analysis to uncover patterns, contradictions, and opportunities for refinement. (artifact 3)

1. MVP Parents Vision Board and HMW selection

2. Crazy 8 Ideation, selection of concepts to test, prototype user flow

3. Two manual affinity maps, Rainbow Chart, Atomic UX Canvas

Findings and Analysis

The Prototype

1. The problem prioritized for testing was:

Children don’t know how to manage their time because they have never done it before and lack the necessary skills, which makes it hard for parents to help their kids learn how to use social media wisely.

2. We explored this through the guiding question:

How might we provide parents with an easy way to help their children learn fundamental time-management skills, resulting in a balance between online and offline activities?

3. Two ideas were selected for the concept test:

Adjustable parental controls with reminders for children: Parents choose how to respond when limits are exceeded: regain control, send encouragement, or issue gentle reminders over three days. (A concept I ideated in Crazy 8s.)

Customizable rewards chosen by parents: Parents select or define rewards for children who meet agreed goals.

Prototype Divergence from Approved Concept

Time constraints and workstream misalignment led to testing a different prototype than approved. Both concepts were altered:

Adaptive parental controls with immediate coaching choices became fixed reminders triggered after three days.

Parent-customized rewards became preset generic ones.

This shifted the test from guided autonomy with personalized motivation to delayed enforcement with standardized responses. (See video of the tested prototype.)

Test Context | Data Reliability Limitations

While the prototype revealed useful parental perspectives, several factors limited the reliability and depth of the results:

Prototype divergence: The version tested differed from the approved concept, so participants reacted to a different learning model than the one we aimed to validate.

Participant mismatch: Of only four participants, three had children under age seven, so their feedback reflected speculation about future behaviors rather than lived experience with social-media use.

Research plan misalignment: The research plan’s scope was mismatched to the prototype’s capacity and drifted to topics outside the prototype’s functionality.

This overextension blurred research goals, making it unclear whether the test aimed to assess comprehension, behavior, or long-term impact, and ultimately limited the reliability of insights.

Analysis fragmentation: The team cycled through multiple synthesis methods without consensus: one affinity map continuously reworked asynchronously, a second built on misaligned goals, then rainbow charts, atomic charts, and AI analysis—producing contradictory interpretations.

These limitations undermined test validity: wrong prototype, wrong audience, misaligned research questions, fragmented analysis. Results offered directional signals only, requiring re-testing with correct parameters.

Key Insights from Testing (Directional Only)

Even with reliability limits, a few consistent patterns emerged across all synthesis methods:

Clarity of Control

Parents wanted transparency on how and when limits were enforced. Most expected firm boundaries rather than soft reminders, underscoring the need to clarify where guidance ends and restriction begins.

Customization Matters

Families valued the ability to tailor rewards, activities, and message tone to fit their own routines and children’s personalities.

Collaboration as Motivation

Parents preferred features that invite children into setting goals and rewards, rather than enforcing rules unilaterally.

Real-Time Feedback

Delayed reminders felt disconnected from behavior. Parents wanted immediate feedback when limits were exceeded to reinforce awareness and accountability.

My Role

As we coordinated across time zones with limited synchronous touchpoints, gaps emerged between approved concepts and prototype execution. I focused on identifying misalignments early, refining research plans to match what was testable, and documenting process lessons for future sprints.

Strategic Collaboration & Continuity

Helped pivot the team from incomplete MMP vision boards to a parent-focused MVP, grounding the sprint in rapid, testable feedback for faster learning and iteration.

Ideated a concept building directly on Sprint 6's core insight (scaffolded parental guidance to help children build self-regulation), ensuring continuity across sprints.

It was one of two concepts the team selected, and I co-created the initial user flow to bring it to life.

Proactive Realignment & Rigor

When gaps emerged between selected concepts and execution, I worked with the team to clarify the prototype's intended learning goal and refocus on hypothesis verification.

Refined the discussion guide when the research scope exceeded the prototype's capacity, ensuring testing could generate accurate, actionable insights.

Introduced AI-assisted transcript review to triangulate findings, strengthening consistency when manual affinity analyses conflicted.

Servant Leadership & Team Support

Bridged communication gaps during moments of tension or misunderstanding, facilitating constructive dialogue to maintain productive collaboration.

Provided coaching to new leads, fostering confidence and stability across a distributed, cross-time-zone team.

Sprints 7 & 8 | Key Outcomes

Non-Target Insights Documented

Captured directional feedback from four parents, three of whom had children under 7, explicitly documenting age limitations and framing insights as preliminary hypotheses. Signals around customization, collaboration, and control clarity preserved for potential testing with age-appropriate audiences.

Hypothesis-Test Path Realigned

Next learning plan corrected, aligning audience, prototype, and research questions to properly test adjustable parental support and customizable rewards, ensuring the next sprint evaluates whether gradual autonomy helps children build time-management skills.

Cross-Team Alignment Strengthened

Team alignment strengthened through uncovering and addressing communication and coordination gaps, building the foundation for more effective, consistent delivery and sustainable value creation in future sprints.

Reflections + Next Steps

Reflections

This project reminded me how energized I get in fast-moving, intellectually curious environments. I jumped in fully, collaborating across time zones, synthesizing quickly, and naturally stepping into leadership as we shaped direction.

I also learned to balance strong ideas with shared ownership. When I see a path clearly, I tend to move fast. This experience taught me the value of slowing down just enough so others can get there with me. Influence works best when it feels shared, not steered.

This space matters to me on a personal level. As a parent, I see how overwhelming digital life can be for kids, and how little support exists to help them build healthy tech habits. That made this challenge feel real and urgent, not theoretical.

Most of all, it reminded me why I love UX: digging into human behavior, challenging assumptions, and translating insight into meaningful solutions. That is the work that lights me up.

Next Steps

Although this project wrapped within Tech Fleet, I see a promising future for it. If continued, I would collaborate with child-development and digital-wellbeing experts to shape a safe, evidence-grounded version of the gradual-autonomy model.

Future exploration would include age-appropriate scaffolding for skill-building and ethical testing frameworks that support families without relying on restrictive controls. This is an area I hope to continue exploring in my career, because the problem is real and worth solving.

What Teammates Said

“Alyssa brought so much energy and enthusiasm to our team. Her curiosity and commitment were infectious. She has a talent for getting to the core of any issue and uncovering insights others might miss, and she always pushed our team forward while making sure everyone felt valued.”

Phillip, UX Designer

“Alyssa immersed herself in research and brought a richness to our discussions. She stepped up to lead, made insights actionable, and incited engagement and inclusivity on our team.”

Jessie, UX Designer

“Alyssa always brought a ton of energy and a proactive mindset. Her collaborative spirit and problem-solving abilities stood out. She has an incredible knack for getting to the root of any problem, and her hard work and dedication were evident in everything she did.”

Madina, UX Designer

“Alyssa is incredibly warm and compassionate. Her ability to conduct extensive research and extract valuable insights is truly impressive. She excels in soft skills and maintains a positive and productive work environment.”

Divya, HR Partner